AMD unveils Instinct MI350 GPUs & Helios to spearhead open AI

AMD has set out its vision for an open AI ecosystem, announcing new semiconductor, software and systems products across its portfolio.

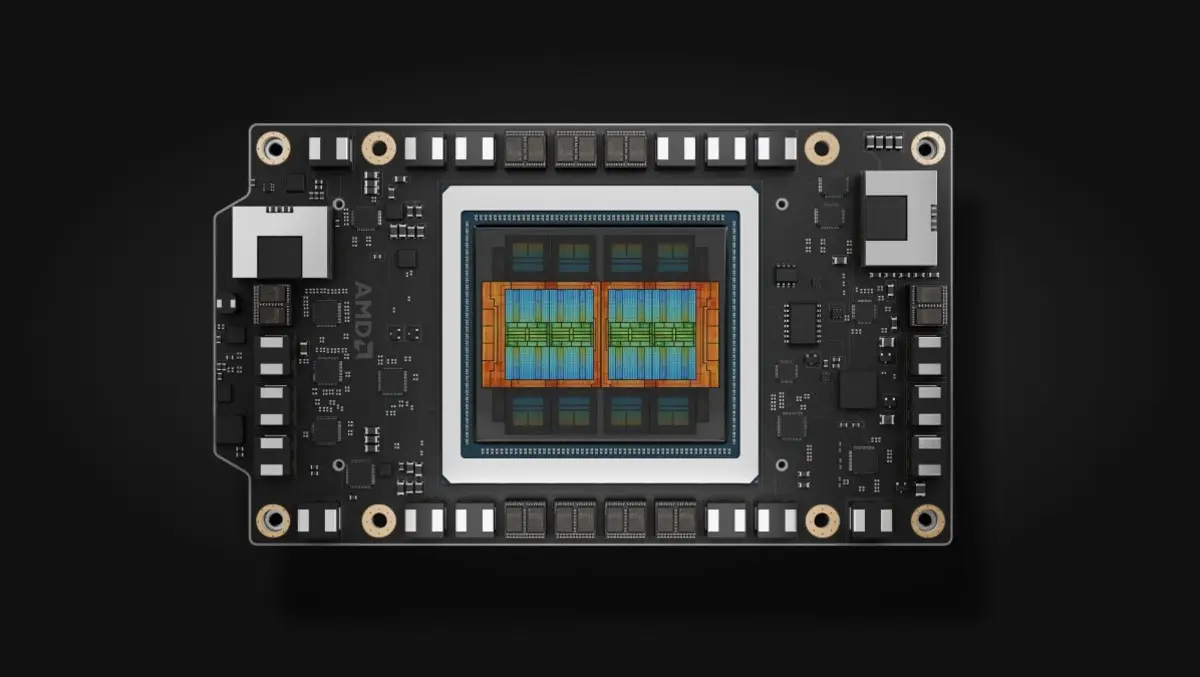

The company has launched its Instinct MI350 Series GPUs, which include the MI350X and MI355X variants, promising a fourfold increase in AI computing performance compared to the previous generation. The MI350 Series will be available in broad deployments in the second half of 2025, including in hyperscale environments such as Oracle Cloud Infrastructure (OCI).

AMD also previewed its next-generation rack-scale AI platform, dubbed "Helios," which will be based on the upcoming Instinct MI400 Series GPUs, Zen 6-based EPYC "Venice" CPUs, and Pensando "Vulcano" network interface cards (NICs). According to AMD, Helios is expected to deliver up to ten times the inference performance on Mixture of Experts models when it becomes available in 2026.

Rack-scale infrastructure

The company demonstrated fully integrated, open-standards, rack-scale AI infrastructure already rolling out in the market. This infrastructure features the just-announced Instinct MI350 Series accelerators, AMD's 5th Gen EPYC processors, and AMD Pensando Pollara NICs, which together are being adopted by hyperscalers such as Oracle Cloud Infrastructure.

Oracle announced that it will offer zettascale AI clusters accelerated by the latest Instinct processors, with up to 131,072 MI355X GPUs, enabling customers to build, train, and deploy AI models at scale.

Open software initiatives

On the software side, AMD has introduced ROCm 7.0, the latest version of its open-source AI software stack. ROCm 7.0 supports industry-standard frameworks, broadens hardware compatibility, and introduces new development tools, drivers, APIs, and libraries designed to advance AI development and deployment.

The company also announced the broad availability of the AMD Developer Cloud, aimed at the global developer and open-source communities. This cloud environment is intended to give users access to next-generation computing resources for AI projects.

Dr. Lisa Su, AMD Chair and CEO, said:

"AMD is driving AI innovation at an unprecedented pace, highlighted by the launch of our AMD Instinct MI350 series accelerators, advances in our next generation AMD 'Helios' rack-scale solutions, and growing momentum for our ROCm open software stack. We are entering the next phase of AI, driven by open standards, shared innovation and AMD's expanding leadership across a broad ecosystem of hardware and software partners who are collaborating to define the future of AI."

Energy efficiency goals

The Instinct MI350 Series has surpassed AMD's prior goal of a 30-fold increase in the energy efficiency of AI training and high-performance computing nodes over five years, ultimately delivering a 38-fold improvement. Looking further ahead, AMD has set a new goal for 2030: a 20-fold increase in rack-scale energy efficiency, measured against a 2024 baseline. If realised, this would allow a typical AI model that currently requires more than 275 racks to train to be trained in less than one rack by 2030, using 95% less electricity.
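The 95% figure follows directly from the 20-fold efficiency target: doing the same work at 20 times the efficiency needs one twentieth of the energy. The short Python sketch below works through that arithmetic. The function names are illustrative rather than anything AMD publishes, and it assumes "energy efficiency" means work done per unit of energy for a fixed workload. Note that the rack consolidation figure (more than 275 racks down to less than one) is a separate ratio of rack counts and is not derived from the 20-fold number here.

```python
def energy_saving(efficiency_gain: float) -> float:
    """Fraction of energy saved for a fixed workload when efficiency
    (work per unit of energy) improves by the given factor."""
    if efficiency_gain <= 0:
        raise ValueError("efficiency_gain must be positive")
    return 1.0 - 1.0 / efficiency_gain


def consolidation_factor(racks_before: float, racks_after: float) -> float:
    """How many times fewer racks are needed after consolidation."""
    if racks_before <= 0 or racks_after <= 0:
        raise ValueError("rack counts must be positive")
    return racks_before / racks_after


if __name__ == "__main__":
    # 2030 goal from the article: 20x rack-scale energy efficiency.
    saving = energy_saving(20)
    print(f"Energy saved at 20x efficiency: {saving:.0%}")

    # Article figures: more than 275 racks today, less than one rack in 2030.
    # Using exactly 275 and 1 therefore gives a lower bound on the ratio.
    print(f"Rack consolidation (lower bound): {consolidation_factor(275, 1):.0f}x")
```

The same function applied to the earlier node-level results (30-fold goal, 38-fold achieved) gives the equivalent per-workload saving, though those figures use a different baseline and scope than the 2030 rack-scale goal.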

Collaborations and adoption

AMD noted that seven of the ten largest AI customers are now deploying Instinct accelerators. During the demonstration, representatives from companies including Meta, OpenAI, Microsoft, Oracle, Cohere, HUMAIN, Red Hat, Astera Labs, and Marvell discussed their ongoing collaborations with AMD.

Meta reported that Instinct MI300X is broadly deployed for Llama 3 and Llama 4 inference, and expressed enthusiasm about the MI350's compute power, performance per total cost of ownership, and memory. Meta also indicated that it is collaborating with AMD on future AI roadmaps, including plans for the Instinct MI400 Series platform.

OpenAI CEO Sam Altman outlined the importance of integrating hardware, software, and algorithms, and discussed OpenAI's partnership with AMD on AI infrastructure. He noted that research and GPT models are running in production on Azure on the MI300X, with deeper design engagement expected on the MI400 Series platforms.

HUMAIN described an agreement with AMD to build open, scalable, and cost-efficient AI infrastructure using the full range of AMD's computing platforms.

Microsoft announced that Instinct MI300X is now deployed across proprietary and open-source AI models in production environments on Azure. Cohere shared that its Command models, used for enterprise-grade large language model inference, are running on Instinct MI300X.

Red Hat explained that expanded collaboration with AMD is enabling production-ready AI environments, with AMD Instinct GPUs on Red Hat OpenShift AI serving hybrid cloud deployments. Astera Labs and Marvell also reinforced their work with AMD to provide next-generation AI interconnects and infrastructure solutions through the open UALink ecosystem.

AMD continues to promote the role of open standards and partnerships as it aims to drive further progress in AI performance, efficiency, and accessibility through advances in both hardware and software platforms.