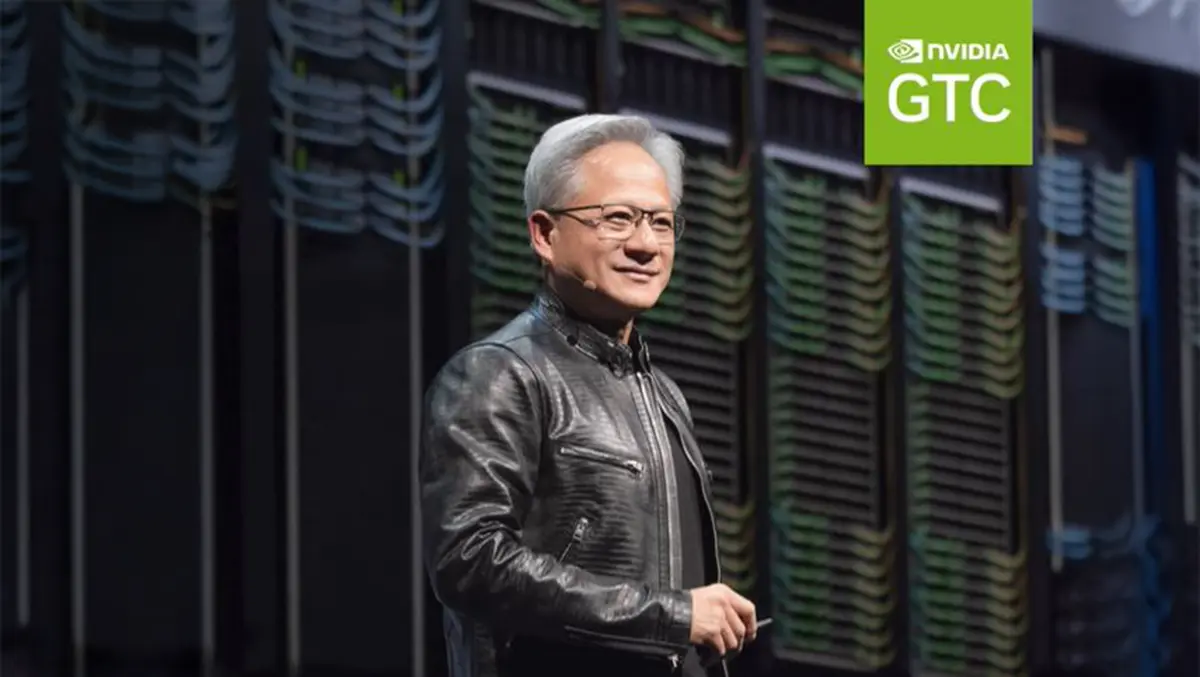

NVIDIA CEO reveals Blackwell AI platform during keynote

Speaking at Nvidia's annual GTC conference, CEO Jensen Huang revealed Nvidia's Blackwell AI platform, an innovation he described as "the ultimate scale-up" for data centres and artificial intelligence (AI) workloads.

"Blackwell is the result of years of engineering and innovation," Huang said.

"It's designed to tackle the immense computational demands of agentic AI and reasoning systems."

The Rise of Blackwell

The Blackwell platform, named after mathematician David Blackwell, is Nvidia's latest generation of GPU architecture.

According to Huang, it is designed to address the rapidly growing demand for computation as AI systems increasingly require extensive reasoning capabilities.

"AI models are becoming smarter and require more tokens for reasoning," Huang explained. "Blackwell will enable those systems to generate content faster and more efficiently."

The Blackwell platform introduces several key advancements, including support for FP4, a 4-bit floating-point precision format that cuts the memory, bandwidth and energy needed for each calculation. "With FP4, we can dramatically reduce power consumption while enhancing model performance," Huang added.
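As a rough illustration of what a 4-bit float can hold, the Python sketch below rounds a short list of values onto the FP4 grid. It assumes the E2M1 layout (1 sign bit, 2 exponent bits, 1 mantissa bit), whose positive values are 0, 0.5, 1, 1.5, 2, 3, 4 and 6. For simplicity it uses one shared scale factor for the whole list. Production formats apply a separate scale to each small block of values and differ in rounding details. The function name `quantize_fp4` is hypothetical and is not an Nvidia API.

```python
# Magnitudes representable by a 4-bit E2M1 float (sign is stored separately)
FP4_E2M1_VALUES = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]


def quantize_fp4(xs):
    """Round a non-empty list of floats onto the FP4 (E2M1) grid.

    Simplified sketch: one shared scale maps the largest magnitude to 6.0,
    the largest E2M1 value. Returns (dequantized values, scale).
    """
    absmax = max(abs(x) for x in xs)
    scale = absmax / 6.0 if absmax > 0 else 1.0
    out = []
    for x in xs:
        mag = abs(x) / scale
        # Nearest representable magnitude; ties go to the smaller value
        # because min() returns the first minimum in this ascending list.
        q = min(FP4_E2M1_VALUES, key=lambda v: abs(v - mag))
        out.append((-q if x < 0 else q) * scale)
    return out, scale


values, scale = quantize_fp4([0.1, -0.7, 1.2, 3.0])
print(values, scale)  # [0.0, -0.75, 1.0, 3.0] 0.5
```

Each value now needs only 4 bits plus a share of the scale factor, which is where the memory and energy savings come from. The cost is the coarse rounding visible in the output.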

Scaling Up with NVLink

Nvidia has taken a significant step forward by introducing the NVLink 72 architecture, which enables extreme scale-up capabilities for data centres. The design pairs disaggregated NVLink switches with compute nodes in a single rack, dramatically improving bandwidth and system flexibility.

"Our goal is to create a massive GPU that feels like one big system," Huang explained. "We've taken our successful Hopper architecture and scaled it up by a factor of 25."

In practical terms, this means greater throughput and faster token generation for AI models. "This is what AI factories will look like in the future," Huang said. "The more you build, the more tokens you generate, and the more efficient your system becomes."

Advancing AI Efficiency

One of Nvidia's most significant announcements was Nvidia Dynamo, inference software that Huang described as the operating system for AI factories. He called it a game-changing technology designed to manage complex AI workloads dynamically.

"Dynamo enables us to allocate GPU resources intelligently," he said. "We can now assign more GPUs to processes that require intensive computation while reserving resources for decoding and token generation."

This innovation has the potential to reduce energy consumption dramatically while improving performance. "Dynamo combined with Blackwell offers a 40-times improvement in AI inference over the previous Hopper platform," Huang claimed.
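Huang's description resembles what is usually called disaggregated serving. The compute-heavy prefill phase processes the input prompt. The decode phase generates output tokens one at a time. Each runs on its own pool of GPUs, and the two pools can be sized independently. The Python sketch here illustrates only that pool-sizing idea with a naive proportional split. `split_gpus` and its load inputs are hypothetical, and this is not Dynamo's actual API or scheduling algorithm.

```python
def split_gpus(total_gpus: int, prefill_load: float, decode_load: float) -> tuple[int, int]:
    """Split a GPU pool between prefill and decode workers in proportion to load.

    Hypothetical illustration only; not Nvidia Dynamo's API or algorithm.
    Returns (prefill_gpus, decode_gpus).
    """
    if total_gpus < 2:
        raise ValueError("need at least one GPU for each phase")
    total_load = prefill_load + decode_load
    if total_load <= 0:
        # No load information: split the pool evenly
        prefill = total_gpus // 2
    else:
        prefill = round(total_gpus * prefill_load / total_load)
    # Keep at least one GPU in each pool
    prefill = max(1, min(total_gpus - 1, prefill))
    return prefill, total_gpus - prefill


# Prompt-heavy traffic: most GPUs go to prefill
print(split_gpus(8, prefill_load=3.0, decode_load=1.0))  # (6, 2)
```

A production scheduler would also weigh latency targets and memory, and would rebalance as traffic shifts. The proportional rule is used here only because it is easy to follow.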

Co-Packaged Optics for Future Networks

Recognising the growing demands on data centre connectivity, Nvidia also announced its first co-packaged optics platform using silicon photonics. Rather than relying on pluggable optical transceivers, the design integrates the optics directly into the switch package, significantly improving power efficiency.

"By eliminating costly transceivers, we've dramatically reduced power consumption," Huang explained. "This allows data centres to dedicate more power to compute rather than signal transmission."

Huang estimated that by deploying silicon photonics, large-scale data centres could recover as much as 60 megawatts of power capacity. "That's enough power to run 100 Rubin Ultra racks, a remarkable improvement," he added.
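The two figures Huang quoted imply a per-rack power budget. A quick back-of-the-envelope check in Python, using only the numbers reported above and assuming the recovered power is spread evenly across the racks:

```python
def power_per_rack_kw(recovered_mw: float, racks: int) -> float:
    """Divide recovered power (megawatts) evenly across racks; return kilowatts per rack."""
    return recovered_mw * 1000 / racks


# Figures as reported from the keynote: 60 MW recovered, 100 Rubin Ultra racks
per_rack = power_per_rack_kw(60, 100)
print(f"Implied budget: {per_rack:.0f} kW per rack")  # Implied budget: 600 kW per rack
```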

Preparing for the Future

In addition to Blackwell, Huang outlined Nvidia's roadmap for the coming years, revealing plans for the next-generation "Vera Rubin" platform in 2026, followed by "Rubin Ultra" in 2027. Each new architecture is expected to deliver substantial improvements in performance, efficiency, and scalability.

"The future of AI factories demands constant innovation," Huang said. "Our roadmap is designed to support enterprises as they build the infrastructure required to meet these demands."

Enterprise and Robotics Innovation

Nvidia also announced significant advances in enterprise computing, unveiling its DGX Station and DGX Spark systems, new AI workstations designed to let businesses run AI models securely on-site.

"These are the computers of the AI age," Huang declared. "With 20 petaflops of computing power, DGX Station is designed to empower businesses of all sizes."

For robotics, Nvidia introduced Isaac Groot N1, a general-purpose AI model designed for humanoid robots. "Robots will need to perceive, reason and take action," Huang said. "Groot N1 gives them the ability to perform tasks in complex real-world environments."

Nvidia further underlined its commitment to robotics with the announcement of Newton, a physics engine developed in collaboration with Google DeepMind and Disney Research. "Newton enables robots to learn motor skills and refine dexterity through reinforcement learning," Huang explained.

Open Source Commitment

In a move to encourage AI innovation, Nvidia announced that both its Groot N1 robot model and Newton physics engine would be open-sourced.

"By sharing these technologies with the global community, we hope to accelerate progress in robotics and AI," Huang said.

A New Era of Computing

Huang concluded by emphasising Nvidia's ambition to build a computing ecosystem that can support the increasing complexity of AI applications. "We're entering a new era where AI factories will generate content on demand, fundamentally changing how computing is done," he said.

"Our focus is on developing technology that enables businesses to build AI systems that are powerful, efficient, and scalable," Huang added.

Reflecting on Nvidia's journey, Huang noted the impact of the company's innovations on scientific research, adding, "One scientist told me, 'Because of your work, I can now do my life's work in my lifetime.'"

With Blackwell, Nvidia is poised to drive the next wave of AI advancement, promising businesses and researchers faster, smarter computing solutions than ever before.